A while back, I read about some ransomware that, instead of leaving a ransom note, used the speech functionality of Windows systems to tell the user that the files on their system had been encrypted. After reading that, I did some research and put together a file that plays selected speech through the speakers of my laptop. I thought it might be fun to take a different approach with this blog post and share the file.

Copy and paste the file below into an editor window, and save it on your desktop as 'speak.vbs' (or, as I did, 'deadpool.vbs'). Then simply double-click the file.

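```vbscript
dim sapi
set sapi=createobject("sapi.spvoice")      ' SAPI text-to-speech voice object
Set sapi.Voice = sapi.GetVoices.Item(0)    ' first installed voice (index starts at 0)
sapi.Rate = 2                              ' speaking rate, -10 (slowest) to 10 (fastest)
sapi.Volume = 100                          ' volume, 0 to 100
sapi.speak "This shit's gonna have NUTS in it!"
sapi.speak "It's time to make the chimichangas!"
sapi.speak "hashtag drive by"
```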

Windows 7 has just one 'voice', so there's no real need for line 3; Windows 10 has two voices by default, so change the '0' to a '1' to switch things up a bit.

The cool thing is that you can attach a file like this to different actions on your system, or you can have fun with your friends (a la the days of SubSeven) and put a file like this in one of the autorun locations on their system. Ah, good times!

The Windows Incident Response Blog is dedicated to the myriad information surrounding and inherent to the topics of IR and digital analysis of Windows systems. This blog provides information in support of my books; "Windows Forensic Analysis" (1st thru 4th editions), "Windows Registry Forensics", as well as the book I co-authored with Cory Altheide, "Digital Forensics with Open Source Tools".

Thursday, September 28, 2017

Tuesday, September 26, 2017

Updates

ADS

It's been some time since I've had an opportunity to talk about NTFS alternate data streams (ADS), but the folks at Red Canary recently published an article where ADSs take center stage. NTFS alternate data streams go back a long way, all the way to the first versions of NTFS, and were a 'feature' included to support resource forks in the HFS file system. I'm sure that with all of the other possible artifacts on Windows systems today, ADSs are not something that is talked about at great length, but it is interesting how applications on Windows systems make use of them. What this means to examiners is that they really need to understand the context of those ADSs...for example, what does it mean if you find an ADS named "Zone.Identifier" attached to an MS Word document or to a PNG file, and it is much larger than 26 bytes?
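To make the 26-byte point concrete: the smallest common Zone.Identifier content is a `[ZoneTransfer]` line followed by a `ZoneId=` line, each ending in CRLF, which comes to exactly 26 bytes. The Python sketch below is a hypothetical helper, not an existing tool; it assumes you've already read the stream's bytes (on a live Windows system, opening `C:\path\file.docx:Zone.Identifier` in binary mode reads the ADS directly). It reports the size and Zone ID, and marks anything that isn't a `key=value` line under `[ZoneTransfer]`. Keep in mind that some browsers legitimately add keys such as `HostUrl` and `ReferrerUrl`, so "bigger than 26 bytes" on its own is a reason to look, not a verdict.

```python
import re

# Smallest typical content: b"[ZoneTransfer]\r\nZoneId=3\r\n" is 26 bytes.
MINIMAL_SIZE = 26
KEY_VALUE = re.compile(r"^[A-Za-z]+=")


def inspect_zone_identifier(raw: bytes) -> dict:
    """Summarize a Zone.Identifier stream and note anything unexpected in it."""
    report = {
        "size": len(raw),
        "oversized": len(raw) > MINIMAL_SIZE,
        "zone_id": None,
        "odd_lines": [],
        "structural_ok": True,
    }
    try:
        text = raw.decode("utf-8-sig")
    except UnicodeDecodeError:
        # Non-text bytes do not belong in an INI-style Zone.Identifier stream.
        report["structural_ok"] = False
        return report
    lines = text.splitlines()
    if not lines or lines[0].strip() != "[ZoneTransfer]":
        report["structural_ok"] = False
    for line in lines[1:]:
        if line.startswith("ZoneId="):
            report["zone_id"] = line.split("=", 1)[1].strip()
        elif line.strip() and not KEY_VALUE.match(line):
            report["odd_lines"].append(line)
    if report["odd_lines"]:
        report["structural_ok"] = False
    return report
```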

Equifax

Some thoughts on Equifax...

According to the Equifax announcement, the breach was discovered on 29 July 2017. Having performed incident response activities for close to 20 years, it's no surprise to me at all that it took until 7 Sept for the announcement to be made. Seriously. This stuff takes time to work out. Something that does concern me is the following statement:

The company has found no evidence of unauthorized activity on Equifax's core consumer or commercial credit reporting databases.

Like I said, I've been responding to incidents for some time, and I've used that very same language when reporting findings to clients. However, most often that's followed by a statement along the lines of, "...due to a lack of instrumentation and visibility." And that's the troubling part of this incident to me...here's an organization that collects vast amounts of extremely sensitive data in one place, and they have a breach that went undetected for 3 months.

Unfortunately, I'm afraid that this incident won't serve as an object lesson to other organizations, simply because of the breaches we've seen over the past couple of years...and more importantly, just the past couple of months...that similarly haven't served that purpose. For a while now, I've used the analogy of a boxing ring, with a line of guys mounting the stairs one at a time to step into the ring. As you're standing in line, you see that these guys are all getting into the ring, and they apparently have no conditioning or training, nor have they practiced...and each one that steps into the ring gets pounded by the professional opponent. And yet, even seeing this, no one thinks about defending themselves, through conditioning, training, or practice, to survive beyond the first punch. You can see it happening in front of you, with 10 or 20 guys in line ahead of you, and yet no one does anything but stand there in the line with their arms at their sides, apparently oblivious to their fate.

Threat Intelligence

Sergio/@cnoanalysis recently tweeted something that struck me as profound...that threat intelligence needs to be treated as a consumer product.

He's right...take this bit of threat intelligence, for example. This is solely an example, and not at all intended to say that anyone's doing anything wrong, but it is a good example of what Sergio was referring to in his short but elegant tweet. While some valuable and useful/usable threat intelligence can be extracted from the article, as is the case with articles from other organizations that are shared as "threat intelligence", this comes across more as a research project than a consumer product. After all, how does someone who owns and manages an IT infrastructure make use of the information in the various figures? How do illustrations of assembly language code help someone determine if this group has compromised their network?

Web Shells

Bart Blaze created a nice repository of PHP backdoors, which also includes links to other web shell resources. This is a great resource for DFIR folks who have encountered such things.

Be sure to update your Yara rules!

Sharing is Caring

Speaking of Yara rules, the folks at NViso posted a Yara rule for detecting CCleaner 5.33, which is the version of the popular anti-forensics tool that was compromised to include a backdoor.

Going a step beyond the Yara rule, the folks at Talos indicate in their analysis of the compromised CCleaner that the malware payload is maintained in the Registry, in the path:

HKLM\Software\Microsoft\Windows NT\CurrentVersion\WbemPerf\001 - 004

Unfortunately, the Talos write-up doesn't specify if 001 is a key or value...yes, I know that for many this seems pedantic, but it makes a difference. A pretty big difference. With respect to automated tools for live examination of systems (Powershell, etc.), as well as post-mortem examinations (RegRipper, etc.), the differences in coding the applications to look for a key vs. a value could mean the difference between detection and not.
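Here's roughly why the distinction matters for live-response tooling. This is a minimal Python sketch, not anything from Talos; it uses the standard-library `winreg` module (Windows only), and the names `probe_wbemperf` and `describe` are hypothetical. Looking for a subkey and looking for a value are two different calls (`OpenKey` vs. `QueryValueEx`), so a tool written to check only one of them would simply miss the other.

```python
import sys

# Parent path from the Talos write-up; whether 001-004 are subkeys or values is the open question.
PARENT = r"SOFTWARE\Microsoft\Windows NT\CurrentVersion\WbemPerf"
NAMES = ("001", "002", "003", "004")


def describe(is_key: bool, is_value: bool) -> str:
    """Collapse the two lookups into one label."""
    if is_key and is_value:
        return "both"
    if is_key:
        return "key"
    if is_value:
        return "value"
    return "absent"


def probe_wbemperf(names=NAMES) -> dict:
    """Live check (Windows only): is each name a subkey, a value, both, or neither?"""
    import winreg  # only available on Windows, so imported here

    access = winreg.KEY_READ | winreg.KEY_WOW64_64KEY  # read the 64-bit view of HKLM\Software
    try:
        parent = winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, PARENT, 0, access)
    except FileNotFoundError:
        return {name: "absent" for name in names}
    results = {}
    with parent:
        for name in names:
            try:
                winreg.OpenKey(parent, name, 0, access).Close()
                is_key = True
            except FileNotFoundError:
                is_key = False
            try:
                winreg.QueryValueEx(parent, name)
                is_value = True
            except FileNotFoundError:
                is_value = False
            results[name] = describe(is_key, is_value)
    return results


if __name__ == "__main__" and sys.platform == "win32":
    print(probe_wbemperf())
```

A post-mortem tool (a RegRipper plugin, for instance) faces the same choice when walking a hive file: enumerate subkeys, enumerate values, or both.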

Ransomware

The Carbon Black folks had a couple of interesting blog posts on the topic of ransomware recently, one about earning quick money, and the other about predictions regarding the evolution of ransomware. From the second Cb post, prediction #3 was interesting to me, in part because this is a question I saw clients ask starting in 2016. More recently, just a couple of months ago, I was on a client call set up by corporate counsel when one of the IT staff interrupted the kickoff of the call to ask if sensitive data had been exfiltrated; rather than a disruption of the call, this illustrated to me the paramount concern behind the question. However, the simple fact is that even in 2017, organizations that are hit with these breaches (evidently some regulatory bodies consider a ransomware infection to be a "breach") are neither prepared for a ransomware infection, nor instrumented to answer the question themselves.

I suspect that a great many organizations are relying on their consulting staffs to tell them if the variant of ransomware has demonstrated an ability to exfiltrate data during testing, but that assumption is fraught with issues as well. For example, it assumes that someone else has seen and tested that variant of ransomware, particularly when you (as the "victim") are unable to provide a copy of the ransomware executable. Further, what if the testing environment did not include any data or files that the variant would have wanted to, or was programmed to, exfil from the environment?

Looking at the Cb predictions, I'm not concerned with tracking them to see if they come true or not...my concern is, how will I, as an incident responder, address questions from clients who are not at all instrumented to detect the predicted evolution of ransomware?

On the subject of ransomware, Kaspersky identified a variant dubbed "nRansom", named as such because instead of demanding bitcoin, the bad guys demand nude photographs of the victim.

Attack of the Features

It turns out that MS Word has another feature that the bad guys have found and exploited, once again leaving the good folks using the application to catch up.

From the blog post:

The experts highlighted that there is no description for Microsoft Office documentation provides basically no description of the INCLUDEPICTURE field.

Nice.
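For anyone who wants to check documents for this field: a .docx is a zip archive, and field codes live in `word/document.xml`, either in `w:instrText` runs (which Word may split across several runs) or in the `w:instr` attribute of a `w:fldSimple` element. The following Python sketch (the function name is hypothetical) pulls out the quoted targets of any INCLUDEPICTURE fields; it doesn't handle unquoted targets, and it ignores headers, footers, and legacy .doc files.

```python
import io
import re
import zipfile
import xml.etree.ElementTree as ET

W = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"
TARGET = re.compile(r'INCLUDEPICTURE\s+"([^"]+)"', re.IGNORECASE)


def includepicture_targets(docx_bytes: bytes) -> list:
    """Return the quoted targets of INCLUDEPICTURE fields in word/document.xml."""
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        root = ET.fromstring(zf.read("word/document.xml"))
    # A field code may be split across several w:instrText runs, so join them first.
    codes = "".join(el.text or "" for el in root.iter(W + "instrText"))
    simple = " ".join(el.get(W + "instr", "") for el in root.iter(W + "fldSimple"))
    return TARGET.findall(codes + " " + simple)
```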

Tuesday, September 05, 2017

Stuff

QoTD

The quote of the day comes from Corey Tomlinson, content manager at Nuix. In a recent blog post, Corey included the statement:

The best way to avoid mistakes or become more effective is to learn from collective experience, not just your own.

You'll need to read the entire post to get the context of the statement, but the point is that this is something that applies to SO much within the DFIR and threat hunting communit(y|ies). Whether you're sharing experiences solely within your team, or you're engaging with others outside of your team and cross-pollinating, this is one of the best ways to extend and expand your effectiveness, not only as a DFIR analyst, but as a threat hunter, as well as an intel analyst. None of us knows nor has seen everything, but together we can get a much wider aperture and insight.

Hindsight

Ryan released an update to hindsight recently...if you do any system analysis and encounter Chrome, you should really check it out. I've used hindsight several times quite successfully...it's easy to use, and the returned data is easy to interpret and incorporate into a timeline. In one case, I used it to demonstrate that a user had bypassed the infrastructure protections put in place by going around the Exchange server and using Chrome to access their AOL email...launching an attachment infected their system with ransomware.

Thanks, Ryan, for an extremely useful and valuable tool!

It's About Time

I ran across this blog post recently about time stamps and Outlook email attachments, and that got me thinking about how many sources and formats for 'time' there are on Windows systems.

Microsoft has a wonderful page available that discusses various times, such as File Times. From that same page, you can get more information about MS-DOS Date and Time, which we find embedded in shell items (yes, as in Shellbags).

If nothing else, this really reminds me of the various aspects of time that we have to consider and deal with when conducting DFIR analysis. We have to consider the source, and how mutable that source may be. We have to consider the context of the time stamp (for example, AppCompatCache).
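As a small illustration of how different two of those formats are, here's a Python sketch that converts each one. Per Microsoft's documentation, a FILETIME is a 64-bit count of 100-nanosecond intervals since January 1, 1601 (UTC), while an MS-DOS date/time packs the date and the time into two 16-bit values with only 2-second resolution and no time zone of its own. The function names are mine, for illustration only.

```python
from datetime import datetime, timedelta, timezone

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(ft: int) -> datetime:
    """FILETIME: 100-nanosecond intervals since 1601-01-01 UTC (truncated to microseconds)."""
    return FILETIME_EPOCH + timedelta(microseconds=ft // 10)


def dos_to_datetime(dos_date: int, dos_time: int) -> datetime:
    """MS-DOS date/time: 2-second resolution, and the value itself records no time zone."""
    year = ((dos_date >> 9) & 0x7F) + 1980  # bits 9-15: years since 1980
    month = (dos_date >> 5) & 0x0F          # bits 5-8
    day = dos_date & 0x1F                   # bits 0-4
    hour = (dos_time >> 11) & 0x1F          # bits 11-15
    minute = (dos_time >> 5) & 0x3F         # bits 5-10
    second = (dos_time & 0x1F) * 2          # bits 0-4 hold seconds / 2
    return datetime(year, month, day, hour, minute, second)  # naive on purpose
```

For example, `dos_to_datetime(19210, 32217)` gives 2017-08-10 15:46:50; an odd number of seconds can never be represented, which is worth remembering when lining these up against FILETIME values in a timeline.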

Using Every Part of The Buffalo

Okay, so I stole that section title from a paper that Jesse Kornblum wrote a while back; however, I'm not going to be referring to memory, in this case. Rather, I'm going to be looking at document metadata. Not long ago, the folks at ProofPoint posted a blog entry that discussed a campaign they were seeing that seemed very similar to something they'd seen three years ago. Specifically, they looked at the metadata in Windows shortcut (LNK) files and noted something that was identical between the 2014 and 2017 campaigns. Reading this, I thought I'd take a closer look at some of the artifacts, as the authors included hashes for the .docx ("need help.docx") file, as well as for a LNK file in their write-up. I was able to locate copies of both online, and began my analysis.

Once I downloaded the .docx file, I opened it in 7Zip and exported all of the files and folders, and quickly found the OLE object they referred to in the "word\embeddings\oleObject.bin" file. Parsing this file with oledmp.pl, I found a couple of things...first, the OLE date embedded in the file is "10.08.2017, 15:46:51", giving us a reference time stamp. At this point we don't know if the time stamp has been modified, or what...so let's just put that aside for the moment.
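The 7Zip step works because a .docx is just a zip archive. If you'd rather script it, something like the Python sketch below (standard library only; the function name is hypothetical) pulls out everything under `word/embeddings/` and checks each member for the OLE compound file signature, `D0 CF 11 E0 A1 B1 1A E1`. Note that inside the archive the path uses forward slashes (`word/embeddings/oleObject.bin`). Parsing the streams themselves is left to a tool like oledmp.pl.

```python
import io
import zipfile

# Signature at the start of an OLE compound file (the format of oleObject*.bin members).
CFB_MAGIC = bytes.fromhex("d0cf11e0a1b11ae1")


def embedded_objects(docx_bytes: bytes) -> dict:
    """Map each word/embeddings/ member of a .docx to (size, looks_like_ole)."""
    found = {}
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        for name in zf.namelist():
            if name.startswith("word/embeddings/"):
                data = zf.read(name)
                found[name] = (len(data), data[:8] == CFB_MAGIC)
    return found
```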

Next, I looked at the available streams in the OLE file:

```
Root Entry Date: 10.08.2017, 15:46:51 CLSID: 0003000C-0000-0000-C000-000000000046
1 F.. 6 \ ObjInfo
2 F.. 44511 \ Ole10Native
```

Hhhmmm...that looks interesting.

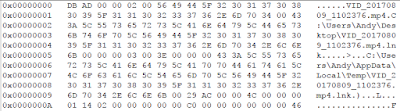

|

| Excerpt of oleObject.bin file |

Okay, so we see what they were talking about in the ProofPoint post...right there at offset 0x9c is "4C", the beginning of the embedded LNK file. Very cool.

This document appears to be identical to what was discussed in the ProofPoint blog post, at figure 16. In the figure above, we can see a reference to "VID_20170809_1102376.mp4.lnk", and the "word\document.xml" file contains the text, "this is what we recorded, double click on the video icon to view it. The video is about 15 minutes."
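The "4C" at offset 0x9c mentioned above is the start of the shell link header: per the MS-SHLLINK specification, every LNK file begins with a 4-byte HeaderSize of 0x0000004C followed by the LinkCLSID {00021401-0000-0000-C000-000000000046}. A minimal Python sketch (hypothetical helper names) for finding that 20-byte signature inside a stream such as Ole10Native might look like this; slicing from a hit gives a candidate LNK to hand to a parser, though any trailing bytes from the container come along with it.

```python
import uuid

# MS-SHLLINK: HeaderSize (0x0000004C, little-endian) followed by the LinkCLSID
# {00021401-0000-0000-C000-000000000046} in on-disk (little-endian GUID) byte order.
LNK_HEADER = b"\x4c\x00\x00\x00" + uuid.UUID("00021401-0000-0000-C000-000000000046").bytes_le


def find_lnk_offsets(data: bytes) -> list:
    """Return every offset in data where a shell link header begins."""
    offsets = []
    hit = data.find(LNK_HEADER)
    while hit != -1:
        offsets.append(hit)
        hit = data.find(LNK_HEADER, hit + 1)
    return offsets


def carve_candidate(data: bytes, offset: int) -> bytes:
    """Slice from a header hit to the end; a LNK parser still has to find the real end."""
    return data[offset:]
```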

I'd also downloaded the file referred to as "LNK object" from the IOCs section of the blog post, and parsed it. Most of the metadata was as one would expect...the time stamps embedded in the LNK file referred to the PowerShell executable on that system, so it was uninteresting. However, there were a couple of items of interest:

```
machineID           john-win764
birth_obj_id_node   00:0c:29:ac:13:81 (VMWare)
vol_sn              CC9C-E694
```

We can see the volume serial number that was listed in the ProofPoint blog, and we see the MAC address, as well. An OUI lookup of the MAC address tells us that it's assigned to VMware. Does this mean that the development environment is a VMware guest? Not necessarily. I'd done research in the past and found that LNK files created on my host system, when I had VMware installed, would "pick up" the MAC address of the VMware interface on the host. What was interesting in that research was that the LNK file remained and functioned correctly, long after I had removed VMware and installed VirtualBox. Not surprising, I know...but it did verify that at one point, when the LNK file was created, I had had VMware installed on my system.
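The birth object ID in the LNK file's tracker data is a version 1 (time-based) GUID, and its last six bytes are the node field, which is normally a MAC address; that's where the `00:0c:29:ac:13:81` above comes from. Below is a Python sketch of pulling the node out and checking it against a few OUIs registered to VMware. The helper names are hypothetical, the OUI list is not exhaustive, and it assumes the raw 16 bytes are in on-disk (little-endian GUID) order, hence `bytes_le` in the usage comment. RFC 4122 also allows a random node value with the multicast bit set, so that bit is worth checking before treating the node as a real MAC.

```python
import uuid

# A few OUIs registered to VMware (not an exhaustive list).
VMWARE_OUIS = {"00:05:69", "00:0c:29", "00:1c:14", "00:50:56"}


def node_mac(object_id: uuid.UUID) -> str:
    """Format the 48-bit node field of a GUID as a colon-separated MAC address."""
    node = object_id.node
    return ":".join(f"{(node >> shift) & 0xFF:02x}" for shift in range(40, -1, -8))


def node_is_random(object_id: uuid.UUID) -> bool:
    """RFC 4122: a randomly generated node has the multicast bit (LSB of octet 0) set."""
    return bool((object_id.node >> 40) & 0x01)


def is_vmware_mac(mac: str) -> bool:
    return mac.lower()[:8] in VMWARE_OUIS


# Usage with 16 raw bytes carved from a LNK file (assumed on-disk GUID byte order):
#   birth = uuid.UUID(bytes_le=raw16)
#   print(birth.version, node_mac(birth), is_vmware_mac(node_mac(birth)))
```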

As a side note, I have to say that this is the first time that I've seen an organization publicizing threat intel and incorporating metadata from artifacts sent to the victim. I'm sure this has been done before, and honestly, I can't see everything...but I did find it extremely interesting that the authors would not only parse the LNK file metadata, but tie it back to a previous (2014) campaign. That is very cool!

In the above metadata, we also see that the NetBIOS name of the system on which the LNK object was created is "john-win764". Something not visible in the metadata but easily found via strings is the SID, S-1-5-21-3345294922-2424827061-887656146-1000.
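For the "easily found via strings" part, here's a Python sketch (hypothetical function name) that looks for S-1-5-21 SIDs stored either as ASCII or as UTF-16LE text, the two encodings that strings utilities typically check. It tries both byte alignments for UTF-16, since the string may start at an odd offset in the file.

```python
import re

# Domain or local-account SIDs: S-1-5-21-<three sub-authorities>-<RID>
SID_RE = re.compile(r"S-1-5-21(?:-\d+){3}-\d+")


def find_sids(data: bytes) -> set:
    """Find S-1-5-21 SID strings stored as ASCII or UTF-16LE text in a binary blob."""
    hits = set(SID_RE.findall(data.decode("latin-1")))  # ASCII (one byte per char)
    for start in (0, 1):  # UTF-16LE text may begin at an even or an odd offset
        text = data[start:].decode("utf-16-le", errors="ignore")
        hits.update(SID_RE.findall(text))
    return hits
```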

This also gives us some very interesting elements that we can use to put together a Yara rule and submit as a VT retrohunt, and determine if there are other similar LNK files that originated from the same system. From there, hopefully we can tie them to specific campaigns.
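As a starting point for that retrohunt, here's a Python sketch that renders a Yara rule from the elements above. The rule name, the function name, and the condition are all hypothetical choices: the rule requires the LNK HeaderSize (0x4C) at offset 0 plus any one of the machine ID, the SID (as ASCII or UTF-16), or the MAC address bytes. Matching on any single element may be noisy, so tune the condition before relying on it.

```python
def lnk_builder_rule(rule_name: str, machine_id: str, sid: str, mac: str) -> str:
    """Render a Yara rule for LNK files carrying any of the given builder artifacts.

    No escaping is done, so machine_id and sid must not contain quotes or backslashes.
    """
    mac_hex = " ".join(mac.upper().split(":"))  # "00:0c:29:..." -> "00 0C 29 ..."
    return f"""rule {rule_name}
{{
    strings:
        $machine = "{machine_id}" ascii wide nocase
        $sid = "{sid}" ascii wide
        $mac = {{ {mac_hex} }}
    condition:
        uint32(0) == 0x4C and any of them
}}"""


print(lnk_builder_rule(
    "hypothetical_john_win764_lnk",
    "john-win764",
    "S-1-5-21-3345294922-2424827061-887656146-1000",
    "00:0c:29:ac:13:81",
))
```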

Okay, so what does all this get us? Well, for an incident responder in the private sector, attribution is a distraction. Yes, there are folks who ask about it, but honestly, when you have to understand a breach so that you can brief your board, your shareholders, and your clients as to the impact, the "who" isn't as important as the "what", specifically, "what is the risk/impact?" However, if you're on the intel side of things, the above elements can assist you with attribution, particularly when they've been developed further, not only through your own data stores, but also via available resources such as VirusTotal.

Ransomware

On the ransomware front, there's more news.

Not only have recently-observed Cerber variants been seen stealing credentials and Bitcoin wallets, but Spora is reportedly now able to steal credentials as well, with the added whammy of logging keystrokes! The article goes on to state that the ransomware can also access browser history.

Over the past 18 months, the ransomware cases that I've been involved with have changed direction markedly. Initially, I thought folks wanted to know the infection vector so that they could take action...with no engagement beyond the report (such is the life of DFIR), it's impossible to tell how the information was used. However, clients then started asking, quite a bit, whether sensitive (PHI, PII, PCI) data had been accessed. Honestly, my first thought...and likely the thought of any number of analysts...was, "...it's ransomware...". But then I started to really think about the question, and I quickly realized that we didn't have the instrumentation and visibility to answer it. Only in some recent cases did clients have Process Tracking enabled in the Windows Event Log...while capture of the full command line wasn't enabled, we did at least get some process names that corresponded closely to what had been seen via testing.

So, in short, without instrumentation and visibility, the answer to the question, "...was sensitive data accessed and/or exfiltrated?" is "we don't know."

However, one thing is clear...there are folks out there who are exploring ways to extend and evolve the ransomware business model. Over the past two years we've seen evolutions in ransomware itself, such as this blog post from Kevin Strickland of SecureWorks. The business model of ransomware has also evolved, with players producing ransomware-as-a-service. In short, this is going to continue to evolve and become an even greater threat to organizations.

Saturday, September 02, 2017

Updates

Office Maldocs, Sans Macros

HelpNetSecurity had a fascinating blog post recently on a change in tactics that they'd observed (actually, it originated from a SANS handler diary post), in that an adversary was using a feature built into MS Word documents to infect systems, rather than embedding malicious macros in the documents. The "feature" is one in which links embedded in the document are updated when the document is opened. In the case of the observed activity, the link update downloaded an RTF document, and things just sort of took off from there.

I've checked my personal system (Office 2010) as well as my corp system (Office 2016), and in both cases, this feature is enabled by default.
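If you want to triage a document for this sort of thing, one approach (a sketch, not a complete detection) is to look at the relationships inside the OOXML package. A .docx file is a ZIP archive, and links to external resources are recorded as Relationship entries with TargetMode="External" in the package's .rels parts. The Python below lists them. Ordinary hyperlinks show up this way too, so the relationship Type (e.g., oleObject vs. hyperlink) matters when reviewing the results, and this doesn't cover RTF or legacy binary .doc files at all.

```python
import io
import xml.etree.ElementTree as ET
import zipfile

# Namespace for OPC package relationship parts (*.rels).
REL_NS = "{http://schemas.openxmlformats.org/package/2006/relationships}"


def external_targets(docx_bytes: bytes) -> list[tuple[str, str, str]]:
    """List (rels part, relationship Type, Target) for every external relationship."""
    results = []
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        for name in zf.namelist():
            if not name.endswith(".rels"):
                continue
            root = ET.fromstring(zf.read(name))
            for rel in root.iter(f"{REL_NS}Relationship"):
                if rel.get("TargetMode") == "External":
                    results.append((name, rel.get("Type", ""), rel.get("Target", "")))
    return results
```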

This is a great example of an evolution of behavior, and illustrates the "arms race" that is going on every day in the DFIR community. We can't detect all possible means of compromise...quite frankly, I don't believe that there's a complete list out there that we could use as a basis, even if we tried. So, from the blue team perspective, the approach is to instrument in a way that makes sense so that we can detect these things, and then respond as thoroughly as possible.

WMI Persistence

TrendMicro recently published a blog post that went into some detail discussing WMI persistence observed with respect to cryptocurrency miner infections. While such infections aren't necessarily damaging to an organization (I've observed several that went undetected for months...), in the sense that they don't deprive or restrict the organization's ability to access their own assets and information, they are the result of someone breaching the perimeter and obtaining access to a system and its resources.

Matt Graeber tweeted that on Windows 10, the creation of the WMI persistence mechanism appears in the Windows Event Logs. While I understand that organizations cannot completely ignore their investment in systems and infrastructure, there needs to be some means by which older OSs are rolled out of inventory as they are made obsolete by the manufacturer. I have seen, or know of others who have seen, active Windows XP and 2003 systems as recently as August, 2017; again, I completely understand that organizations have invested a great deal of money, time, and other resources into maintaining the infrastructure that they'd developed (or legacy infrastructures), but from an information security perspective, there needs to be an eye toward (and an investment in) updating systems that have reached end-of-life.

I'd had a blog post published on my previous employer's corporate site last year; we'd discovered a similar persistence mechanism as a result of creating a mini-timeline to analyze one of several systems infected with Samas ransomware. In this particular case, prior to the system being compromised and used as a jump host to map the network and deploy the ransomware, the system had been compromised via the same vulnerability and a cryptocoin miner had been installed. There was a WMI persistence mechanism created at about the same time, and another artifact (i.e., the LastWrite time on the Win32_ClockProvider Registry key had been modified...) on the system pointed us in that direction.
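As a quick check on an image, you can also look for WMI permanent event subscription class names in the WMI repository itself. The Python sketch below assumes you've extracted OBJECTS.DATA (under C:\Windows\System32\wbem\Repository on Vista and later; XP keeps it in an FS subfolder) and simply counts occurrences of the relevant class names as ASCII or UTF-16LE. Treat hits as a lead for proper parsing, not proof of persistence: Windows may register some subscriptions of its own, so the script-running consumer classes are the more interesting hits.

```python
# Class names associated with WMI permanent event subscriptions.
MARKERS = [
    "__EventFilter",
    "__FilterToConsumerBinding",
    "CommandLineEventConsumer",
    "ActiveScriptEventConsumer",
]


def wmi_subscription_markers(data: bytes) -> dict[str, int]:
    """Count each marker, as ASCII or UTF-16LE, in the raw bytes of a repository file."""
    counts = {}
    for marker in MARKERS:
        n = data.count(marker.encode("ascii")) + data.count(marker.encode("utf-16-le"))
        if n:
            counts[marker] = n
    return counts
```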

InfoSec Program Maturity

Going back just a bit to the topic of the maturity of IT processes and by extension, infosec programs, with respect to ransomware...one of the things I've seen a lot of over the past year to 18 months, beyond the surge in ransomware cases that started in Feb, 2016, are the questions that clients who've been hit with ransomware have been asking. These have actually been really good questions, such as, "...was sensitive data exposed or exfiltrated?" In most instances with ransomware cases, the immediate urge was to respond, "...no, it was ransomware...", but pausing for a bit, the real answer was, "...we don't know." Why didn't we know? We had no way of knowing, because the systems weren't instrumented, and we didn't have the necessary visibility to be able to answer the questions. Not just definitively...at all.

More recently, with the NotPetya issues, we'd see cases where the client had Process Tracking enabled in the Windows Event Log, so that the Security Event Log was populated with pertinent records, albeit without the full command line. As such, we could see the sequence of commands that were associated with NotPetya, and we could say with confidence that no additional commands had been run, but without the full command lines, we couldn't state definitively that nothing else untoward had also been done.
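To see what that gap looks like in the data, here's a minimal Python sketch that pulls the image path and command line from Security Event ID 4688 (process creation) records. It assumes the records were exported as XML and wrapped in a single root element. When the "Include command line in process creation events" policy setting isn't enabled, the CommandLine field comes back empty (or is absent entirely on older systems), which is exactly the situation described above.

```python
import xml.etree.ElementTree as ET

# Namespace used by Windows event XML.
NS = {"e": "http://schemas.microsoft.com/win/2004/08/events/event"}


def process_creations(xml_text: str) -> list[dict]:
    """Return time, image path, and command line for each 4688 record in the XML."""
    root = ET.fromstring(xml_text)
    rows = []
    for event in root.iter(f"{{{NS['e']}}}Event"):
        if event.findtext("e:System/e:EventID", namespaces=NS) != "4688":
            continue
        # EventData holds <Data Name="...">value</Data> pairs.
        data = {d.get("Name"): (d.text or "") for d in event.iterfind("e:EventData/e:Data", NS)}
        created = event.find("e:System/e:TimeCreated", NS)
        rows.append({
            "time": created.get("SystemTime", "") if created is not None else "",
            "image": data.get("NewProcessName", ""),
            # Empty or absent when command line capture isn't enabled.
            "cmdline": data.get("CommandLine", ""),
        })
    return rows
```

Counting the rows where cmdline is empty tells you quickly how much of the picture you're missing.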

So, some things to consider when thinking about or discussing the maturity of your IT and infosec programs include asking yourself, "...what are the questions we would have in the case of this type of incident?", and then, "...do we have the necessary instrumentation and visibility to answer those questions?" Anyone who has sensitive data (PHI, PII, PCI, etc...) is going to have the question of "...was sensitive data exposed?", so the question would be, how would you determine that? Were you tracking full process command lines to determine if sensitive data was marshaled and prepared for exfil?
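Once full command lines are being captured, you can start asking that question of the data. As a minimal sketch, the Python below flags command lines that look like archive creation, a common step in marshaling data for exfil. The pattern list is hypothetical and deliberately small (RAR and 7-Zip "add" commands, makecab); treat it as a starting point to tune for your environment, not a detection set.

```python
import re

# Hypothetical, deliberately small set of staging patterns; tune before relying on it.
STAGING_PATTERNS = {
    "rar archive": re.compile(r'\brar(\.exe)?"?\s+a\b', re.I),
    "7-zip archive": re.compile(r'\b7z(a|r)?(\.exe)?"?\s+a\b', re.I),
    "makecab": re.compile(r"\bmakecab(\.exe)?\b", re.I),
}


def flag_staging(cmdlines: list[str]) -> list[tuple[str, list[str]]]:
    """Return (command line, matched labels) for lines matching any staging pattern."""
    hits = []
    for cmd in cmdlines:
        labels = [label for label, rx in STAGING_PATTERNS.items() if rx.search(cmd)]
        if labels:
            hits.append((cmd, labels))
    return hits
```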

Another aspect of this to consider is, if this information is being tracked because you do, in fact, have the necessary instrumentation, what's your aperture? Are you covering just the domain controllers, or have you included other systems, including workstations? Then, depending on what you're collecting, how quickly can you answer the questions? Is it something you can do easily, because you've practiced and tweaked the process, or is it something you haven't even tried yet?

Something that's demonstrated (to me) on a daily basis is how mature the bad guy's process is, and I'm not just referring to targeted nation-state threat actors. I've seen ransomware engagements where the bad guy got into an RDP server, and within 10 min escalated privileges (his exploit included the CVE number in the file name), deployed ransomware and got out. There are plenty of blog posts that talk about how targeted threat actors have been observed reacting to stimulus (i.e., attempts at containment, indications of being detected, etc.), and returning to infrastructures following eradication and remediation.

WEVTX

The folks at JPCERT recently (June) published their research on using Windows Event Logs to track lateral movement within an infrastructure. This is really good stuff, but is dependent upon system owners properly configuring systems in order to actually generate the log records they refer to in the report (we just talked about infosec programs and visibility above...).
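As an illustration of the kind of analysis this supports (assuming the logs are actually there), here's a Python sketch that summarizes successful logons (Event ID 4624) of type 3 (network) and type 10 (RemoteInteractive, i.e., RDP) by source IP, target system, and account. It assumes the records have already been parsed into flat dictionaries keyed by the EventData field names (IpAddress, TargetUserName, LogonType) plus the System Computer name; the parsing step is left out, and this is not JPCERT's method, just one way to slice the data.

```python
from collections import Counter

# Logon types of interest for lateral movement: 3 = Network, 10 = RemoteInteractive (RDP).
LATERAL_LOGON_TYPES = {"3": "network", "10": "rdp"}


def summarize_logons(records: list[dict]) -> Counter:
    """Count 4624 logons per (source IP, target host, account, logon kind)."""
    summary = Counter()
    for rec in records:
        if rec.get("EventID") != "4624":
            continue
        kind = LATERAL_LOGON_TYPES.get(rec.get("LogonType", ""))
        if kind is None:
            continue
        key = (rec.get("IpAddress", "-"), rec.get("Computer", "?"),
               rec.get("TargetUserName", "?"), kind)
        summary[key] += 1
    return summary
```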

This is also an inherent issue with SIEMs...no amount of technology will be useful if you're not populating it with the appropriate information.

New RegRipper Plugin

James shared a link to a one-line PowerShell command designed to detect the presence of the CIA's AngelFire infection. After reading this, it took me about 15 min to write a RegRipper plugin for it and upload it to the GitHub repository.
