Not long ago, Florian Roth shared some fascinating thoughts via his post, The Bicycle of the Forensic Analyst, in which he discusses increases in efficiency in the forensic review process. I say "review" here, because "analysis" is a term that is often used incorrectly, but that's for another time. Specifically, Florian's post discusses efficiency in the forensic review process during incident response.

After reading Florian's article, I had some thoughts that I wanted to share that would extend what he's referring to, in part because I've seen, and continue to see, the need for something just like what he discusses. I've shared my own thoughts on this topic previously.

My initial foray into digital forensics was not terribly different from Florian's, as he describes in his article. For me, it wasn't a lab crammed with equipment and two dozen drives, but the image his words create, and perhaps even the sense of "where do I start?", were likely similar. At the same time, this was also a very manual process...open images in a viewer, or access data within images via some other means, and begin processing the data. Depending upon the circumstances, we might access and view the original data to verify that it *can* be viewed, and at that point, extract some modicum of data (referred to as "triage data") to begin the initial parsing and data presentation process before kicking off the full acquisition process. But again, this has often been a very manual process, and even with checklists, it can be tedious, time-consuming, and prone to errors.

Over the years, analysts have adopted something similar to what Florian describes, using such tools as Yara, Thor (Lite), log2timeline/plaso, or CyLR. These are all great tools that provide considerable capabilities, making the analyst's job easier when used appropriately and correctly. I should note that several years ago, extensions for Yara and RegRipper were added to the Nuix Workstation product, putting the functionality and capability of both tools at the fingertips of investigators, allowing them to significantly extend their investigations from within the Nuix product. This is an example of how a commercial product gave its users the ability to leverage freeware tools in their parsing and data presentation process.

So, where does the "bicycle" come in? Florian said:

> Processing the large stack of disk images manually felt like being deprived of something essential: the bicycle of forensic analysts.

His point is, if we have the means for creating vast efficiencies in our work, alleviating ourselves of manual, time-consuming, error-prone processes, why don't we do so? Why not leverage scanners to reduce our overhead and make our jobs easier?

So, what was achieved through Florian's use of scanners?

> The automatic processing of the images accelerated our analysis a lot. From a situation where we processed only three disk images daily, we started scanning every disk image on our desk in a single day. And we could prioritize them for further manual analysis based on the scan reports.

Florian's article continues with a lot of great information regarding the use of scanners, and applying detection engineering to processing acquired images and data. He also provides examples of rules to identify the misuse/abuse of common tools.
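To make the prioritization idea concrete, here is a minimal sketch of ranking images for manual review based on scan findings. The `(image_name, severity)` input shape, the severity labels, and the weights are all assumptions made for illustration; they are not the output format of Thor or any other scanner, so a real implementation would first have to parse whatever report the scanner actually produces.

```python
from collections import Counter

# Hypothetical severity weights; a real scanner defines its own levels.
SEVERITY_WEIGHTS = {"alert": 10, "warning": 3, "notice": 1}


def prioritize_images(findings):
    """Rank image names by total weighted score, highest first.

    `findings` is an iterable of (image_name, severity) pairs, a made-up
    shape standing in for whatever a scan report actually contains.
    """
    scores = Counter()
    for image, severity in findings:
        # Unknown severities contribute nothing but keep the image listed.
        scores[image] += SEVERITY_WEIGHTS.get(severity, 0)
    # Sort by score descending, then by name so ties come out in a fixed order.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Illustrative findings only; these image names are invented.
findings = [
    ("host-a.e01", "notice"),
    ("host-b.e01", "alert"),
    ("host-b.e01", "warning"),
    ("host-c.e01", "warning"),
]

for image, score in prioritize_images(findings):
    print(f"{score:>3}  {image}")
```

The weights are the part worth arguing about; the point is only that once every image has been scanned, deciding which one to open first becomes a sorting problem rather than a guess.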

All of this is great, and it's something we should all look to in our work, keeping two things in mind. First, if we're going to download and use tools created by others (Yara/Thor, plaso, RegRipper, etc.), we have to understand what the tools actually do. We can't make assumptions about the tools and the functionality they provide, as these assumptions lead to significant gaps in analysis. By way of example, in March 2020, Microsoft published a blog article addressing human-operated ransomware attacks. In that article, they identified a ransomware group that used WMI for persistence, and the team I was engaged with at the time received data from several customers impacted by that ransomware group. However, the team was unable to determine if WMI had been used for persistence, because the toolset they were using to parse data did not have the capability to parse the OBJECTS.DATA file. The collection process included this file, but the parsing process did not examine it for persistence mechanisms. As a result, analysts assumed that the data had been parsed and that the result was negative, when in fact the file had never been examined at all.
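One way to keep that kind of gap from going unnoticed is to compare what was collected against what the toolset actually parses. The following is a minimal sketch under stated assumptions: the parser names and the artifact inventory are hypothetical and do not describe any particular toolset; only OBJECTS.DATA comes from the example above.

```python
def unparsed_artifacts(collected, parsers):
    """Return collected artifact names that no parser claims to handle.

    `collected` is a list of artifact file names; `parsers` maps a parser
    name to the artifact names it handles. Matching is case-insensitive.
    """
    handled = {name.lower() for names in parsers.values() for name in names}
    return sorted(a for a in collected if a.lower() not in handled)


# Hypothetical inventory: what was collected vs. what each parser handles.
collected = ["SYSTEM", "SOFTWARE", "Security.evtx", "OBJECTS.DATA"]
parsers = {
    "registry_parser": ["SYSTEM", "SOFTWARE"],
    "eventlog_parser": ["Security.evtx"],
}

for name in unparsed_artifacts(collected, parsers):
    print(f"collected but NOT parsed: {name}")
```

Reporting "collected but not parsed" explicitly lets an analyst distinguish "we looked and found nothing" from "we never looked".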

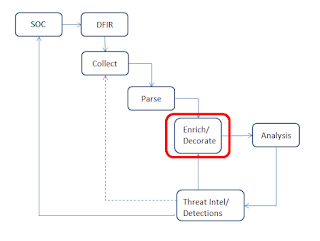

Fig 1: New DFIR Model

Second, to truly leverage the efficiencies of Florian's scanner "bicycles", we need to continually extend them, baking findings developed through our own analysis, through digging into open reports, etc., back into the process.
