In my previous post, I presented some of the basic design elements for a structured approach to describing artifact constellations, and leveraging them to further DFIR analysis. As much of this is new, I'm sure that this all sounds like a lot of work, and if you've read the other posts on this topic, you're probably wondering about the benefits of all this work. In this post, I'll take a shot at netting out some of the more obvious benefits.

Maintaining Corporate Knowledge

Regardless of whether you're talking about an internal corporate position or a consulting role, analysts are going to see and learn new things based on their analysis. You're going to see new applications or techniques used, and perhaps even see the same threat actor making small changes to their TTPs due to some "stimulus". You may find new artifacts based on the configuration of the system, or what applications are installed. A number of years ago, a co-worker was investigating a system that happened to have LANDesk installed, along with the software monitoring module. They'd found that the LANDesk module maintains a listing of executables run, including the first run time, the last run time, and the user account responsible for the last execution, all of which mapped very well into a timeline of system activity, making the resulting timeline much richer in context.

When something like this is found, how is that corporate knowledge currently maintained? In this case, the analyst wrote a RegRipper plugin that still exists and is in use today. But how are organizations (both internal and consulting teams) maintaining a record of artifacts and constellations that analysts discover?

Maybe a better question is, are organizations doing this at all?

For many organizations with a SOC capability, detections are written, often based on open reporting, and then tested and put into production. From there, those detections may be tuned; for internal teams, the tuning would be based on one infrastructure, but for MSS or consulting orgs, the tuning would be based on multiple (and likely an increasing number of) infrastructures. Those detections and their tuning are based on the data source (i.e., SIEM, EDR, or a combination), and serve to preserve corporate knowledge. The same approach can/should be taken with DFIR work, as well.

Consistency

One of the challenges inherent to large corporate teams, and perhaps more so to consulting teams, is that analysts all have their own way of doing things. I've mentioned previously that analysis is nothing more than an individual applying the breadth of their knowledge and experience to a data source. Very often, analysts will receive data for a case, and approach that data initially based on their own knowledge and experience. Given that each analyst has their own individual approach, the initial parsing of collected data can be a highly inefficient endeavor when viewed across the entire team. And because the approach is often based solely on the analyst's own individual experience, items of importance can be missed.

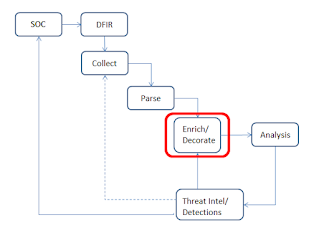

What if each analyst were instead able to approach the data sources based not just on their own knowledge and experience, but the collective experience of the team, regardless of the "state" (i.e., on vacation/PTO, left the organization, working their own case, etc.) of the other analysts? What if we were to use a parsing process that was not based on the knowledge, experience and skill of one analyst but instead on that of all analysts, as well as perhaps some developers? That process would normalize all available data sources, regardless of the knowledge and experience of an individual analyst, and the enrichment and decoration phase would also be independent of the knowledge and skill of a single analyst.
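To make the idea a bit more concrete, here is a minimal sketch of what a shared "normalize, then enrich and decorate" step might look like. Everything in it is an assumption made for illustration: the `Event` structure, the `epoch` and `text` record keys, the `normalize` and `decorate` function names, and the two sample rules are hypothetical, and are not taken from any existing tool. The point is only that the rules live in one place that the whole team maintains, rather than in any one analyst's head.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Event:
    """One normalized timeline entry, whatever the original data source."""
    timestamp: datetime
    source: str
    description: str
    tags: list = field(default_factory=list)


def normalize(source, records):
    """Turn source-specific records (hypothetical 'epoch'/'text' keys) into Events."""
    return [
        Event(datetime.fromtimestamp(r["epoch"], tz=timezone.utc), source, r["text"])
        for r in records
    ]


# Team-maintained rules: (tag to apply, lowercase substring to look for).
# These two rules are illustrative assumptions, not real detections.
TEAM_RULES = [
    ("review: file in temp folder", "\\temp\\"),
    ("review: new service installed", "service installed"),
]


def decorate(events, rules=TEAM_RULES):
    """Tag every event that matches a rule, then return the events in time order."""
    for event in events:
        text = event.description.lower()
        for tag, needle in rules:
            if needle in text:
                event.tags.append(tag)
    return sorted(events, key=lambda e: e.timestamp)


# Two made-up data sources feeding one timeline.
events = normalize("EventLog", [{"epoch": 1600000300, "text": "Service installed: updater"}])
events += normalize(
    "FileSystem",
    [{"epoch": 1600000000, "text": "C:\\Users\\a\\AppData\\Local\\Temp\\run.ps1 created"}],
)
timeline = decorate(events)
for e in timeline:
    print(e.timestamp.isoformat(), e.source, e.description, e.tags)
```

When an analyst learns something new on a case, the change would be a new entry in the shared rule list, and every subsequent case would benefit from it regardless of who is working it.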

Now, something like this does not obviate the need for analysts to be able to conduct their own analysis, in their own way, but it does significantly increase efficiency, as analysts are no longer manually parsing individual data sources, particularly those selected based upon their own experience. Instead, the data sources are being parsed, and then enriched and decorated through an automated means, one that is continually improved upon. This would also reduce costs associated with commercial licenses, as teams would not have to purchase licenses for several products for each analyst (e.g., "...I prefer this product for this work, and this other product for these other things...").

By approaching the initial phases of analysis in such a manner, efficiency is significantly increased, cost goes way down, and consistency goes through the roof. This can be especially true for organizations that encounter similar issues often. For example, internal organizations protecting corporate assets may regularly see certain issues across the infrastructure, such as malicious downloads or phishing with weaponized attachments. Similarly, consulting organizations may regularly see certain types of issues (e.g., ransomware, BEC, etc.) based on their business and customer base. Having an automated means for collecting, parsing, and enriching known data sources, and presenting them to the analyst, saves time and money, gets the analyst to conducting analysis sooner, and provides for much more consistent and timely investigations.

Artifacts in Isolation

Deep within DFIR, behind the curtains and under the dust ruffle, when we see what really goes on, we often see analysts relying far too much on single sources of data or single artifacts for their analysis, in isolation from each other. This is very often the result of allowing analysts to "do their own thing", which, while sounding like an authoritative way to conduct business, is highly inefficient and fraught with mistakes.

Not long ago, I heard a speaker at a presentation state that they'd based their determination of the "window of compromise" on a single data point, and one that had been misinterpreted. They'd stated that the ShimCache/AppCompatCache timestamp for a binary was the "time of execution", and extended the "window of compromise" in their report from a few weeks to four years, without taking time stomping into account. After the presentation was over, I had a chat with the speaker and walked through my reasoning. Unfortunately, the case they'd presented on had been completed (and adjudicated) two years prior to the presentation. For this case, the victim had been levied a fine based on the "window of compromise".

Very often, we'll see analysts referring to a single artifact (ShimCache entry, perhaps an entry in the AmCache.hve file, etc.) as definitive proof of execution. This is perhaps an understanding based on an over-simplification of the nature of the artifact and, without corresponding artifacts from the constellation, will lead to inaccurate findings. It is often not until we peel back the layers of the analysis "onion" that it becomes evident that the finding, as well as the accumulated findings of the incident, were incorrectly based on individual artifacts, from data sources viewed in isolation from other pertinent data sources. Further, the nature of those artifacts is being misinterpreted; rather than demonstrating program execution, they simply illustrate the existence of a file on the system.
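As a rough illustration of looking at a constellation rather than a single artifact, the sketch below refuses to call something "execution" when the only evidence comes from sources that just show a file existed. The `EXISTENCE_ONLY` set reflects the point made above about ShimCache and AmCache; the members of `EXECUTION_SOURCES`, the `assess` function, and the sample observations are assumptions for illustration only, and a real team would maintain its own list based on its own testing.

```python
# Sources that, per the discussion above, show a file existed on the system.
EXISTENCE_ONLY = {"ShimCache", "AmCache"}

# ASSUMPTION: sources a team might accept as supporting execution.
# This list is illustrative and would need to be validated by the team.
EXECUTION_SOURCES = {"Prefetch", "EDR process event"}


def assess(path, observations):
    """Summarize what the combined artifacts support for one file path.

    observations is a list of (source, path) tuples from already-parsed data.
    """
    wanted = path.lower()
    sources = {src for src, seen in observations if seen.lower() == wanted}
    if sources & EXECUTION_SOURCES:
        return "execution supported"
    if sources & EXISTENCE_ONLY:
        return "file existence only"
    return "no supporting artifacts"


# Made-up observations for two files.
observations = [
    ("ShimCache", "C:\\Temp\\a.exe"),
    ("ShimCache", "C:\\Temp\\b.exe"),
    ("Prefetch", "c:\\temp\\b.exe"),
]
print(assess("C:\\Temp\\a.exe", observations))
print(assess("C:\\Temp\\b.exe", observations))
```

A check like this does not replace analysis, but it keeps a lone ShimCache or AmCache entry from being reported as proof of execution without someone first looking for the rest of the constellation.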

Summary

Over the years that I've been doing DFIR work, little in the way of "analysis" has changed. We went from getting a few hard drives that we imaged and analyzed, to going on-site to do collections of images, then to doing triage collections to scope the systems that needed to be analyzed. We then extended our reach further through the use of EDR telemetry, but analysis still came down to individual analysts applying just their own knowledge and experience to collected data sources. It's time we change this model, and leverage the capabilities we have on hand in order to provide more consistent, efficient, accurate, and timely analysis.