Within the DFIR and threat intel communities, there has been considerable talk about "TTPs": the tactics, techniques, and procedures used by targeted threat actors. The most challenging aspect of this topic is that there's a great deal of discussion about "having TTPs" and "getting TTPs", but when you look hard at what's actually being shared, you're left wondering, "where're the TTPs?" I'm still struggling a bit with this, and I'm sure others are as well.

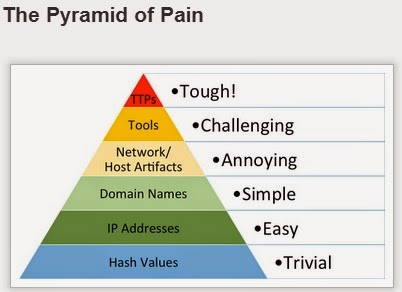

I ran across Jack Crook's blog post recently, and didn't think that simply posting a comment to his article would do it justice. Jack's been sharing a lot of great stuff, and there's a lot of great stuff in this article as well. I particularly like how Jack tied what he was looking at directly into the Pyramid of Pain, as discussed by David Bianco. That's something we don't see often enough: rather than starting something from scratch, build on the great work that others have already done. Jack does this very well, and it was great to see him using David's pyramid to illustrate his point.

A couple of posts that might be of interest in fleshing out Jack's thoughts are HowTo: Track Lateral Movement, and HowTo: Determine Program Execution.

More than anything else, I would suggest that the pyramid David described can be seen as an indicator of the maturity of an organization's IR capability. The further you've moved up the pyramid (Jack provides a great walk-through of doing so), the more mature your activity tends to be, and when you've matured to the point where you're focused on TTPs, you're actually using a process, rather than simply looking for specific data points. And because you have a process, you'll be able not only to detect and respond to other threats that use different TTPs, but also to detect when those TTPs change. Remember, when it comes to these targeted threats, you're not dealing with malware that simply does what it does, really fast and over and over again. Your adversary can think, and can change what they do in response to any perceived stimulus.

Consider the use of PSExec (a tool) as a means of implementing lateral movement. If you're looking for hashes, you may miss it: all someone has to do is flip a single bit within the PE file itself, particularly one that has no effect on how the executable functions, and your detection method has been obviated. If a different tool is used (there are a number of variants), and you're looking for specific tools, then your detection method is similarly obviated. However, if your organization has an enforced policy that such tools will not be used, and you're looking for Service Control Manager event records (in the System Event Log) with event ID 7045 (indicating that a service was installed), you're likely to detect the use of the tool on the destination systems, as well as the use of other similar tools. On more recent versions of Windows, you can then look at other event records to determine the originating system for the lateral movement.
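To make the 7045 approach a little more concrete, here is a minimal sketch of filtering exported System Event Log records for service installations that aren't on a known-good list. It assumes the records have already been exported to a list of dicts (for example, by some EVTX-to-JSON step); the key names (`Provider`, `EventID`, `ServiceName`, `Computer`, `ImagePath`), the sample data, and the `AgentSvc` allow-list entry are all illustrative assumptions rather than a real schema. Note that the filter keys on the behavior (a new service) rather than on any tool's hash or name.

```python
from typing import Iterable, Iterator

# Assumption: each record is a dict produced by some EVTX-to-JSON export of the
# System Event Log; the key names used here are illustrative, not a real schema.
SCM_PROVIDER = "Service Control Manager"
SERVICE_INSTALLED = 7045  # "A service was installed in the system."


def new_service_installs(records: Iterable[dict],
                         known_good: Iterable[str]) -> Iterator[dict]:
    """Yield 7045 records for services that are not on the known-good list."""
    allowed = {name.lower() for name in known_good}
    for rec in records:
        if rec.get("Provider") != SCM_PROVIDER:
            continue
        if rec.get("EventID") != SERVICE_INSTALLED:
            continue
        # Service names are compared case-insensitively.
        if rec.get("ServiceName", "").lower() in allowed:
            continue
        yield rec


if __name__ == "__main__":
    # Made-up records; "AgentSvc" stands in for a hypothetical approved service.
    sample = [
        {"Provider": "Service Control Manager", "EventID": 7045,
         "Computer": "WS042", "ServiceName": "PSEXESVC",
         "ImagePath": r"%SystemRoot%\PSEXESVC.exe"},
        {"Provider": "Service Control Manager", "EventID": 7036,
         "Computer": "WS042", "ServiceName": "PSEXESVC"},
    ]
    for hit in new_service_installs(sample, known_good={"AgentSvc"}):
        print(hit["Computer"], hit["ServiceName"], hit.get("ImagePath"))
```

Because renamed or rebuilt variants still have to install a service, the same filter keeps working when the tool itself changes; the allow-list is what has to be maintained for your own infrastructure.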

Book

Looking for Service Control Manager/7045 events is one of the items listed on the malware detection checklist that goes along with chapter 6 of Windows Forensic Analysis, 4/e.


When it comes to malware, I would agree with Jake's blog post regarding not uploading malware/tools that you've found to VT, but I would also suggest that the statement, "...they create a new piece of malware, with a unique hash, just for you..." falls short of the bigger issue. If you're focused on hashes and specific tools/malware, then yes, the bad guy making changes is going to have a huge impact on your ability to detect what they're doing. After all, flipping a single bit somewhere in the file that does not affect the execution of the program is sufficient to change the hash. However, if you're focused on TTPs, your protection and detection process will likely sweep up those changes as well. I understand that the focus of Jake's blog post is to make the information more digestible, but I would also suggest that the bar needs to be raised.
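The single-bit point is easy to demonstrate. This sketch uses stand-in bytes rather than a real PE file (an attacker would pick a byte the loader ignores, such as padding, so the program still runs); it simply shows that inverting one bit yields a completely different SHA-256 value, which is why a hash-only detection is so easy to sidestep.

```python
import hashlib


def flip_bit(data: bytes, offset: int, bit: int = 0) -> bytes:
    """Return a copy of data with a single bit inverted at the given byte offset."""
    buf = bytearray(data)
    buf[offset] ^= 1 << bit
    return bytes(buf)


# Stand-in bytes, not a real PE file: "MZ" followed by zero padding.
original = b"MZ" + bytes(510)
modified = flip_bit(original, offset=400)

print(hashlib.sha256(original).hexdigest())
print(hashlib.sha256(modified).hexdigest())
# Number of bits that differ between the two buffers:
print(sum(bin(a ^ b).count("1") for a, b in zip(original, modified)))
```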

Uploading to VT

One issue not mentioned in Jake's post is that if you upload a sample that you found on your infrastructure, you run the risk not only of letting the bad guy know that his stuff has been detected, but also of exposing your own information: more than once, responders have seen malware samples that include infrastructure-specific information (domains, network paths, credentials, etc.), and uploading that sample exposes the information to the world. I would strongly suggest that before you even consider uploading something (sample, hash) to VT, you invest some time in collecting additional artifacts about the malware itself, either through your own internal resources or with the assistance of experts that you've partnered with.
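One inexpensive step before any upload decision is to check whether the sample embeds your own infrastructure details. The sketch below pulls printable ASCII and UTF-16LE strings out of a file's bytes (similar in spirit to the `strings` utility) and flags any that mention markers you supply, such as internal domain names or file server names. The marker list, the six-character minimum, and the sample bytes are assumptions for illustration; this does not replace proper analysis of the sample.

```python
import re

MIN_LEN = 6  # shortest run worth reporting; an arbitrary choice
# Runs of printable ASCII, and the same characters encoded as UTF-16LE
# (each character followed by a zero byte), similar in spirit to "strings".
ASCII_RUN = re.compile(rb"[\x20-\x7e]{%d,}" % MIN_LEN)
UTF16_RUN = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % MIN_LEN)


def extract_strings(data: bytes) -> list[str]:
    found = [m.group().decode("ascii") for m in ASCII_RUN.finditer(data)]
    found += [m.group().decode("utf-16-le") for m in UTF16_RUN.finditer(data)]
    return found


def infrastructure_hits(data: bytes, markers: list[str]) -> list[str]:
    """Return embedded strings that mention any marker (case-insensitive)."""
    wanted = [m.lower() for m in markers]
    return [s for s in extract_strings(data)
            if any(w in s.lower() for w in wanted)]


if __name__ == "__main__":
    # Made-up sample bytes containing an internal URL and a UNC path.
    sample = (b"junk\x00\x00http://intranet.corp.example.com/x\x00\x00"
              + "\\\\FS01\\finance$".encode("utf-16-le") + b"\x00\x00")
    for s in infrastructure_hits(sample, ["corp.example.com", "fs01"]):
        print(s)
```

Any hit is a strong hint that the sample was built for your environment and should not go to a public service.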


A downside of this pyramid approach, if you're a consultant (third-party responder), is that if you're responding to a client that hasn't engineered their infrastructure to help them detect TTPs, then what you've got left is the lower levels of the pyramid; you can't change the data that you have available to you. Of course, your final report should make suitable recommendations as to how the client might improve their posture for responding. One example might be to ensure that systems are configured to audit at a certain level, such as enabling Audit Other Object Access Events, which isn't configured by default. This would allow for scanning (via the network, or via a SIEM) for event ID 4698 records, indicating that a scheduled task was created. Scanning for these, and filtering out the known-good scheduled tasks within your infrastructure, would allow this TTP to be detected.
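The "scan for 4698, filter out known-good" step might look something like the following sketch. It assumes Security Event Log records have been exported to dicts with `EventID`, `Computer`, and `TaskName` keys (illustrative names, not a real schema), and that you maintain a baseline of task names that are expected in your environment. Grouping by host and task name makes it easy to see whether the same unexpected task turned up on several systems.

```python
from collections import Counter
from typing import Iterable

TASK_CREATED = 4698  # Security log: "A scheduled task was created."


def unexpected_tasks(records: Iterable[dict], baseline: Iterable[str]) -> Counter:
    """Count (host, task name) pairs for 4698 records whose task is not in the baseline.

    Assumption: records are dicts exported from the Security Event Log with
    "EventID", "Computer", and "TaskName" keys; these names are illustrative.
    """
    known = {name.lower() for name in baseline}
    hits: Counter = Counter()
    for rec in records:
        if rec.get("EventID") != TASK_CREATED:
            continue
        task = rec.get("TaskName", "")
        if task.lower() in known:
            continue
        hits[(rec.get("Computer", "?"), task)] += 1
    return hits


if __name__ == "__main__":
    # Made-up records; "\Updater" is a hypothetical unexpected task.
    baseline = {r"\Microsoft\Windows\Defrag\ScheduledDefrag"}
    records = [
        {"EventID": 4698, "Computer": "WS1",
         "TaskName": r"\Microsoft\Windows\Defrag\ScheduledDefrag"},
        {"EventID": 4698, "Computer": "WS1", "TaskName": r"\Updater"},
        {"EventID": 4698, "Computer": "WS2", "TaskName": r"\Updater"},
    ]
    for (host, task), count in unexpected_tasks(records, baseline).most_common():
        print(host, task, count)
```

The baseline is the part that has to be engineered per environment, which is exactly why this works better for a team that knows its own infrastructure.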

For a good example of changes in TTPs, take a look at this CrowdStrike video, which I found out about via Twitter thanks to Wendi Rafferty. The speakers do a very good job of describing changes in TTPs. One thing from the video, however: I don't think that a "new approach" to forensics is required, per se, at least not for response teams that are organic to an organization. As a consultant, I don't often see organizations that have enabled WMI logging, as recommended in the video, so it's more a matter of combining an established relationship with your client (minimizing response time and maximizing available data) with a detailed and thorough analysis process.

Regarding TTPs, Ran2 says in this EspionageWare blog post, "Out of these three layers, TTP carries the highest intelligent value to identify the human attackers." While TTPs are the most valuable, they also seem to be the hardest to acquire and pin down.

15 comments:

Thanks for the comments, Harlan. One of the things David Bianco has been preaching since I have known him is to make life harder for the attackers, which I completely agree with. As you move up the pyramid with your detection strategy, you improve your capabilities to detect their tendencies and not necessarily their tools. Let's face it, humans are creatures of habit and we don't necessarily like to change things that already work. We can often use the habits of our adversaries to improve our detection.

Identifying these TTPs and building accurate detection for them may be difficult at first, but the more you see certain attackers, the more their tendencies will stand out. I have also seen overlap among various groups, so one solid detection rule may cover more than people are aware of.

One of the things I struggle with is how to take the TTPs we see through our analysis and convey what they mean to others who may be analyzing events. SOC analysts may see the alert, but not necessarily understand the importance of the meaning behind it. Unfortunately, a lot of what we do and know is tribal knowledge that we gain from being in the thick of things and responding over and over. What stands out as odd to me may seem like nothing to someone who has not seen it before, since we are often alerting on legitimate behavior. I think it's important that all detection is documented and conveys the reason why we are detecting on these indicators, but it is still hard if you have never seen it before.
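One way to keep the "why" attached to the "what" is to store each detection together with its rationale, its common benign causes, and the next triage steps, so that whoever sees the alert also sees the reasoning. The sketch below is a hypothetical record layout (the class, field names, and example text are assumptions, not an existing tool or standard), using the 7045 service-installation detection discussed above as the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionRule:
    """A detection plus the 'tribal knowledge' behind it (hypothetical schema)."""
    name: str
    logic: str                           # what the rule matches, in your query language
    rationale: str                       # why it matters: the attacker behavior it reflects
    benign_causes: tuple[str, ...] = ()  # legitimate activity that also fires it
    triage_steps: tuple[str, ...] = ()   # what an analyst should check next

    def analyst_note(self) -> str:
        """Render the rule as a short note to attach to each alert."""
        lines = [f"Alert: {self.name}", f"Why it matters: {self.rationale}"]
        if self.benign_causes:
            lines.append("Often benign when: " + "; ".join(self.benign_causes))
        for i, step in enumerate(self.triage_steps, 1):
            lines.append(f"  {i}. {step}")
        return "\n".join(lines)


if __name__ == "__main__":
    rule = DetectionRule(
        name="Unexpected service installed",
        logic="System log, Service Control Manager, event ID 7045, not on allow-list",
        rationale="Remote execution tools such as PSExec install a service on the target.",
        benign_causes=("software deployment", "new endpoint agent"),
        triage_steps=("Check the service image path", "Find the originating system"),
    )
    print(rule.analyst_note())
```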

... may be difficult at first...

Any change to what we normally do, to the status quo, is difficult, I agree. But sometimes, that little change, that seems so painful, reaps much more in prevention and detection.

Unfortunately a lot of what we do and know is tribal knowledge...

Agreed...in both senses. It's unfortunate that, because it's "tribal" knowledge, it isn't documented, shared, and then used in some manner.

I think that what needs to happen is a move to change this at the grass roots level.

Jack,

Just a bit of a follow-up...

In my experience, it would seem that TTPs aren't being shared because they aren't being looked for, in part due to the 'status quo'. However, there's also the fact that many folks in the 'threat intel' industry (I don't think that the term "community" fits...) only go after what they know. Malware RE guys go after stuff specific to what can be found through some malware RE activities; similarly, network folks go after network-based artifacts.

The way to address this is to combine the capabilities brought to bear through host-, network-, and memory-based analysis. Saying "we don't have that..." about any particular part of that (host-based artifacts, memory, etc.) is a limiting factor that we must get past. Do you only have a malware sample b/c it was downloaded from VT, or b/c a client "threw it over the fence"? If that's the case, we really have to consider: can we _call it_ "threat intel" if all it's based on is reversing a single sample, without the benefit of context?

Harlan, I like your comment that the pyramid can also be used to measure detection maturity. I've been saying that for a while now. It's not only the pain you can cause the adversary, but it's also a measure of the effort you have to put forth to operate on the higher levels.

I don't agree, however, that consultants coming in to an org that is operating low on the pyramid is a "downside" of the model. It's actually a situation where the model is quite helpful, because it gives you a yardstick against which to measure the organization's detection capabilities. You can point to it and say "You're right *here*, which is only level 3. If you make these changes, you could get to level 5." Tying improvements to something concrete makes it easier for the customer to understand and prioritize.

David,

Thanks for the comment.

I tend to believe that if you're at the point within your organization where you're tracking TTPs (or capable of doing so) then your organization has matured to the level where you're also able to see changes in those TTPs.

It's actually a situation where the model is quite helpful...

I agree; however, what I was referring to when I made that statement was that many times, I hear from other consultants, "okay, great, but how do I get to determining TTPs if all the client that we respond to has is X data?"

I completely agree that the pyramid can be used exactly as you described...consultants can use it to illustrate to the client where they are in the pyramid, and where they can progress to if they implement the recommendations provided in the report.

True. That's an area where the typical fuzziness in detection works in our favor. Usually, it's difficult to nail down your detection to *exactly* the scenario you are trying to capture. We tend to view this as a bad thing that should be improved, and forget that this is just the sort of thing we need if we want to be able to capture incremental changes or variances in the TTPs.

So yeah, if you can detect TTPs, you're likely to detect the changes to them over time (assuming you're paying attention). That's why defenders operating at the TTP level are so difficult for the adversaries to deal with.

Also, re: sharing of TTPs... They are shared, but they are not as widely available as lower-level pyramid indicators. Partly, this is because they are so difficult to collect that many organizations place a higher value on them. This makes them more reluctant to share, for fear of the TTP being 'blown'.

I don't necessarily buy this idea, though. Once your investigation and response is finished, publishing the TTPs allows others to take advantage of the work you did (and hopefully you in turn would be able to take advantage of theirs). But the idea of the Pyramid of Pain is that we *want* the attackers to work hard, and if the TTP really does get "blown", that at least makes them expend more effort to create new TTPs. I don't agree that publication always makes the adversaries change their tactics, but if it did, I'd welcome that.

Another reason TTPs are rarely shared is that they are more difficult to share in an automated fashion. It's easy to post lists of domains, or even signatures for detecting artifacts or tools. There's no good way to express the abstractions involved in TTPs, though. So far, the best I've seen is plain English text, which makes it harder to both produce and to import into tools.

I don't necessarily buy this idea, though.

Agreed. I understand that folks don't want TTPs blown, but I don't think that when they say that, they really realize that what they're saying is that their ability to find the TTPs in the first place is so limited that they won't detect changes.

...makes it harder to both produce and to import into tools.

That's another limitation we're placing on ourselves..."we don't share TTPs b/c we don't have a common language that allows automated import into tools." Eesh. This is an excuse. The issue is that no two infrastructures are the same...even if we had access to some sort of application that would ingest TTPs and implement them into automated tools, does anyone know what effect that would have on the infrastructure?

Also, doesn't this go against everything we know about change control?

The ability to detect TTPs suggests a certain level of maturity within an organization; being able to ingest TTPs in some automated fashion requires a level of maturity in the design and implementation of the network infrastructure and endpoints.

This is a great discussion...too bad that it's just us three.

I think that there's a lot of room for discussion regarding locating, documenting, employing, and sharing TTPs. So far, however, I think that across the board, discussions have been at a high enough level that most folks may see it (the discussion itself) as out of reach.

I would suggest that the first step is that more people need to be engaged in the discussion. I don't mean by throwing in a comment that is flippant, humorous, or completely derails the discussion...I mean something that actually helps advance the discussion.

For example, what are _your_ limitations to detecting and documenting TTPs during a targeted threat IR engagement? What prevents you from doing this? Lack of data, skill, time?

This is nothing new...this goes back a long way. Even on the ISS ERS team in early 2006, we couldn't get other analysts to share indicators at that time, so when we moved into doing PCI work (targeted threats), many analysts (not just on our team) were struggling just to do the minimum, and finding and documenting TTPs was pretty much out of the question.

I'd like to comment on the hash lookup example you mentioned in the article. I would agree that a database of known-good or known-bad hashes is not enough; as you said, an attacker can change one bit and the hash is useless. But what if you incorporate a fuzzy hash scanning mechanism? That would make the bit change insignificant, since a one-bit change would still leave the new version very similar to the original sample in the database, so it would still make a good trigger or indicator. Wouldn't you agree?
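The property being described (a small change barely moves a similarity score, while it completely changes a cryptographic hash) can be illustrated without any third-party tools. The sketch below is not ssdeep or any other real fuzzy hashing scheme; it swaps in a simpler technique, Jaccard similarity over overlapping 4-byte n-grams, on random stand-in data. It compares MD5 values, the similarity between an original and a one-bit variant, and the similarity between two unrelated buffers.

```python
import hashlib
import random


def ngrams(data: bytes, n: int = 4) -> set[bytes]:
    """All overlapping n-byte substrings of data."""
    return {data[i:i + n] for i in range(len(data) - n + 1)}


def similarity(a: bytes, b: bytes, n: int = 4) -> float:
    """Jaccard similarity of the two byte-n-gram sets (1.0 means identical sets)."""
    sa, sb = ngrams(a, n), ngrams(b, n)
    if not sa and not sb:
        return 1.0
    return len(sa & sb) / len(sa | sb)


rng = random.Random(1)  # fixed seed so the stand-in data is reproducible
original = bytes(rng.randrange(256) for _ in range(4096))
unrelated = bytes(rng.randrange(256) for _ in range(4096))

variant = bytearray(original)
variant[1000] ^= 0x01  # flip one bit
variant = bytes(variant)

print(hashlib.md5(original).hexdigest() == hashlib.md5(variant).hexdigest())
print(round(similarity(original, variant), 3))    # close to 1.0
print(round(similarity(original, unrelated), 3))  # close to 0.0
```

As the replies below note, this still keys on the file itself, so it says nothing once the intruder stops using malware.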

Adi, I think fuzzy hashes are a little different than regular hashes like MD5 or SHA1. Fuzzy hashes of known malicious binaries or tools used in an attack would definitely have a higher pyramid value. I tend to think of those as being on either the artifact or the tool levels, depending on the exact circumstance and my mood that day.

That said, I don't encounter them being used for detection very often, which is why I don't specifically try to integrate them into the Pyramid. When I see them, they are usually integrated into someone's sandbox, where they use the fuzzy hash to try to classify samples and relate them to other things they already have identified. This is related to detection, for sure, but usually they are not used directly to detect evil.

Adi,

As David pointed out, fuzzy hashes are of higher value, perhaps than just 'regular' hashes, but what happens when the intruder stops using malware?

Thanks for the response. I'm not suggesting that you should use fuzzy hashes as the main or only source for detection; I'm just saying they can be an effective tool in our arsenal as incident responders. Obviously, you should combine different techniques in the process of responding to an incident.

Good stuff - this is the kind of explanation I point toward those in the management chain that pretend to get it, but don't.

As I approach the TTP and IOC dilemma, the challenge is that while more complex detection and understanding of the adversary's operations causes them more pain, we still can't abandon the lower-level stuff. Imagine the DFIR person who has to report to management that their enterprise was compromised for over a year and was calling out to a published C2.

As an industry, striking the right balance, by automating detection of the lower-value, easily mutable indicators so that we can focus on more mature methods of detecting adversaries, is still a big "need" in my opinion.

TT,

I get what you're saying, and I don't think that anyone is suggesting that the lower level (re: PoP) indicators be abandoned.

Quite the opposite, in fact...I think that what we can all agree on is that if a detection methodology relies solely on those indicators, then the defender is going to need to play "catch up" with the intruder, should the intruder change any of those indicators.

However, if your detection process is more mature, higher up the PoP, then you're not behind when the intruder changes their approach; in fact, your detection of the lower level indicators is already automated, as is your ability to detect when the intruder uses new C2, IPs, tools, etc.
