Dr. Ali Hadi recently posted another challenge image, this one (#7) being a lot closer to a real-world challenge than a lot of the CTFs I've seen over the years. What I mean by that is that in the 22+ years I've done DFIR work, I've never had a customer pose more than 3 to 5 questions that they wanted answered, certainly not 51. And, I've never had a customer ask me for the volume serial number in the image. Never. So, getting a challenge that had a fairly simple and straightforward "ask" (i.e., something bad may have happened, what was it and when?) was pretty close to real-world.

I will say that there have been more than a few times where, following the answers to those questions, customers would ask additional questions...but again, not 37 questions, not 51 questions (like we see in some CTFs). And for the most part, the questions were the same regardless of the customer; once the incident had been identified, questions of risk and reporting would come up: was any data taken, and if so, what data?

I worked the case from my perspective, and as promised, posted my findings, including my case notes and timeline excerpts. I also added a timeline overlay, as well as MITRE ATT&CK mappings (with observables) for the "case".

Jiri Vinopal posted his findings in this tweet thread; I saw the first tweet with the spoiler warning, and purposely did not pursue the rest of the thread until I'd completed my analysis and posted my findings. Once I posted my findings and went back to the thread, I saw this comment:

"...but it could be Windows server etc..so prefetching could be disabled..."

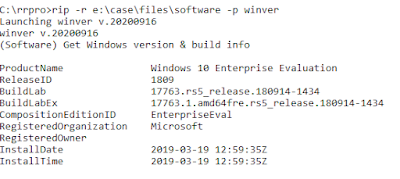

True, the image could be of a Windows server, but that's pretty trivial to check, as illustrated in figure 1.

Fig 1: RRPro winver.pl plugin output
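The workstation-versus-server question can also be answered from a single Registry value. The following is a minimal sketch, and it is not what the winver.pl plugin does: it assumes you've already extracted the `ProductType` string from the `Control\ProductOptions` key in the System hive with your tool of choice, and the function name is purely illustrative.

```python
# Map the ProductOptions\ProductType string to a coarse OS role.
# Assumption: the caller has already pulled this value from the System hive.
PRODUCT_TYPES = {
    "winnt": "workstation",
    "servernt": "server",
    "lanmannt": "domain controller",
}


def classify_product_type(product_type: str) -> str:
    """Return a coarse role for a ProductType string, or 'unknown'."""
    return PRODUCT_TYPES.get(product_type.strip().lower(), "unknown")
```

A result of "workstation" means the "it could be a server" objection doesn't apply to the image.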

Checking to see if Prefetching is enabled is pretty straightforward, as well, as illustrated in figure 2.

Fig 2: Prefetcher Settings via System Hive

If prefetching were disabled, one would think that the *.pf files would simply not be created, rather than having several of them deleted following the installation of the malicious Windows service. The Windows Registry is a hierarchical database that includes, in part, configuration information for the Windows OS and applications, replacing the myriad configuration and ini files from previous versions of the OS. A lot of what's in the Registry controls various aspects of the Windows ecosystem, including Prefetching.
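For anyone who wants to interpret the Prefetcher setting without a plugin, here's a rough sketch. It assumes you've already read the `EnablePrefetcher` DWORD from the `PrefetchParameters` key in the System hive; the `ControlSet001` path below is an assumption (the active control set is identified by the `Select` key), and the function names are illustrative only.

```python
# Key holding the Prefetcher configuration. ControlSet001 is assumed here;
# check the Select key's Current value to find the active control set.
PREFETCH_KEY = (
    r"ControlSet001\Control\Session Manager"
    r"\Memory Management\PrefetchParameters"
)

# Documented meanings of the EnablePrefetcher DWORD.
ENABLE_PREFETCHER = {
    0: "disabled",
    1: "application launch prefetching enabled",
    2: "boot prefetching enabled",
    3: "application launch and boot prefetching enabled",
}


def describe_enable_prefetcher(value: int) -> str:
    """Translate an EnablePrefetcher value into a readable description."""
    return ENABLE_PREFETCHER.get(value, f"unrecognized value {value}")


def app_prefetch_active(value: int) -> bool:
    """True when application launch prefetching (*.pf creation) is on."""
    return value in (1, 3)
```

If `app_prefetch_active()` returns True for the value in the image, missing *.pf files point toward deletion rather than a disabled Prefetcher.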

In addition to Jiri's write-up/tweet thread of analysis, Ali Alwashali posted a write-up of analysis, as well. If you've given the challenge a shot, or think you might be interested in pursuing a career in DFIR work, be sure to take a look at the different approaches, give them some thought, and make comments or ask questions.

Remediations and Detections

Jiri shared some remediation steps, as well as some IOCs, which I thought were a great addition to the write-up. These are always good to share from a case; I included the SysInternals.exe hash extracted from the AmCache.hve file, along with a link to the VT page, in my case notes.

What are some detections or threat hunting pivot points we can create from these findings? For many orgs, looking for new Windows service installations via detections or hunting will simply be too noisy, but monitoring for modifications to the hosts file (%SystemRoot%\System32\drivers\etc\hosts on Windows) might be something valuable, not just as a detection, but for hunting and for DFIR work.
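As one way to turn the hosts file idea into a hunting check, here's a minimal sketch. It assumes you can collect the file's contents as text from an endpoint or image; the function names are hypothetical, and treating anything other than a loopback-to-localhost mapping as worth a look is my simplification, not a vetted detection.

```python
from typing import List, Tuple

LOOPBACK = {"127.0.0.1", "::1"}
LOCAL_NAMES = {"localhost"}


def parse_hosts(text: str) -> List[Tuple[str, str]]:
    """Return (address, hostname) pairs, ignoring comments and blank lines."""
    entries = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line:
            continue
        fields = line.split()
        if len(fields) < 2:
            continue  # malformed line: address with no hostname
        address = fields[0]
        for name in fields[1:]:
            entries.append((address, name.lower()))
    return entries


def suspicious_entries(text: str) -> List[Tuple[str, str]]:
    """Flag every mapping that is not a loopback address for localhost."""
    return [
        (addr, name)
        for addr, name in parse_hosts(text)
        if not (addr in LOOPBACK and name in LOCAL_NAMES)
    ]
```

Note that this also flags hostnames redirected to 127.0.0.1, since that's a common way to black-hole update or security vendor domains.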

Has anyone considered writing Yara rules for the malware found during their investigation of this case? Are there any other detections you can think of, for either EDR or a SIEM?

Lessons Learned

One of the things I really liked about this particular challenge is that, while the incident occurred within a "compressed" timeframe, it did provide several data sources that allowed us to illustrate where various artifacts fit within a "program execution" constellation. If you look at the various artifacts...UserAssist, BAM key, and even ShimCache and AmCache artifacts...they're all separated in time, but come together to build out an overall picture of what happened on the system. By looking at the artifacts together, in a constellation or in a timeline, we can see the development and progression of the incident, and then by adding in malware RE, the additional context and detail will build out an even more complete picture.
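The "constellation" idea can be sketched in a few lines of code. This is only an illustration of merging events from separate artifacts into one ordered view, not the tooling I used for the case; the `Event` type and function names are hypothetical, and it assumes each artifact has already been parsed into timestamped events.

```python
from datetime import datetime
from typing import Iterable, List, NamedTuple


class Event(NamedTuple):
    when: datetime   # timestamp from the artifact, ideally normalized to UTC
    source: str      # e.g. "UserAssist", "BAM", "ShimCache", "AmCache"
    detail: str      # what the artifact says happened


def build_timeline(*sources: Iterable[Event]) -> List[Event]:
    """Merge events from any number of artifact sources, oldest first."""
    merged = [event for source in sources for event in source]
    return sorted(merged, key=lambda event: event.when)


def format_timeline(events: Iterable[Event]) -> List[str]:
    """Render each event as one 'timestamp source detail' line."""
    return [
        f"{e.when.isoformat()} {e.source:<10} {e.detail}" for e in events
    ]
```

Feeding each parsed artifact in as its own list keeps the data sources separate until the merge, so it stays clear which artifact contributed each line.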

Conclusions

A couple of thoughts...

DFIR work is a team effort. Unfortunately, over the years, the "culture" of DFIR has developed a bit of a "lone wolf" mentality. We all have different skill sets, to different degrees, as well as different perspectives, and bringing those to bear is the key to truly successful work. The best (and I mean, THE BEST) DFIR work I've done during my time in the industry has been when I've worked as part of a team that's come together, leveraging specific skill sets to truly deliver high-quality analysis.

Thanks

Thanks to Dr. Hadi for providing this challenge, and thanks to Jiri for stepping up and sharing his analysis!
